What They Are, Why They Matter, and How to Get Started

As more systems move to cloud-native architectures, keeping them healthy and your users happy is no small feat. You’ve probably heard buzzwords like observability, monitoring, OpenTelemetry, and Prometheus thrown around. But what do they really mean, and how do they work together to keep your applications reliable?

Let’s break it down.

What Is Observability, and Why Use It?

At its core, observability is the ability to understand what’s happening inside a system just by looking at its external outputs. In other words, it’s your window into the inner workings of complex, distributed systems like microservices, Kubernetes clusters, or cloud-based apps.

Why do we need it?

- Systems today are dynamic and distributed: VMs, containers, functions, you name it.

- Failures are inevitable, and they can come from many places (network, code, infra).

- You need deep visibility to answer questions like “Why is this slow?” or “What caused that spike?”

With observability in place, you can:

- Quickly detect and resolve incidents

- Optimize performance and cost

- Boost reliability and user satisfaction

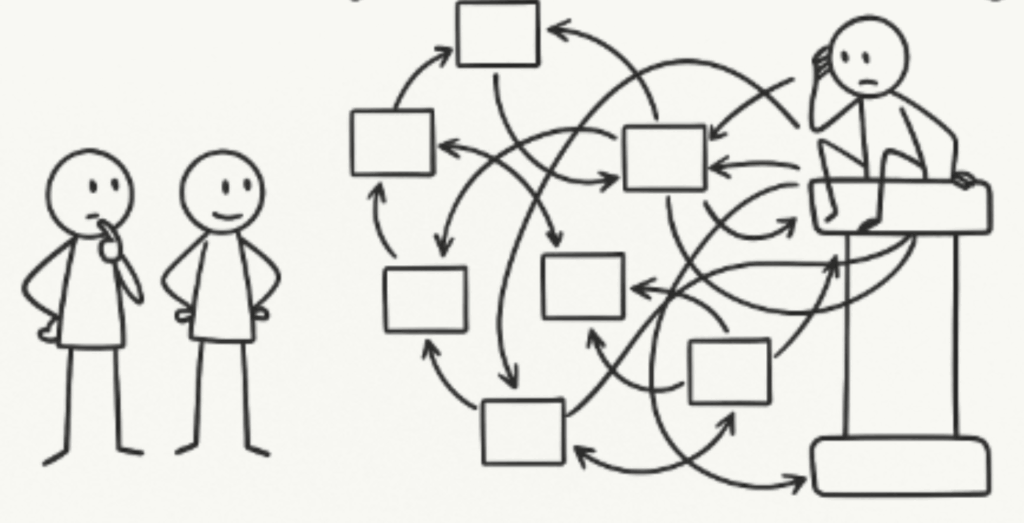

Monitoring vs Observability: What’s the Difference?

These terms are often used interchangeably, but they have different scopes:

| Monitoring | Observability |
| --- | --- |
| Tracks known metrics and health indicators | Gives deep insights into unknown issues |
| Answers: “Is it working?” | Answers: “Why is it not working?” |
| Based on pre-defined alerts (e.g., CPU > 80%) | Based on exploring data to uncover root causes |
| Good for known unknowns | Good for unknown unknowns |

TL;DR:

You could say that monitoring is a subset of observability: you monitor for known issues, and you observe to diagnose the unknown. Unfortunately, observability vendors and standards such as OpenTelemetry (OTel) often forget this.
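The “CPU > 80%” style of pre-defined alert can be sketched in a few lines. This is only an illustration, not the rule engine of any particular tool: the `ThresholdRule` class, its field names, and the “breach for N consecutive samples” condition are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ThresholdRule:
    """A hypothetical pre-defined alert rule (names are illustrative)."""
    metric: str
    threshold: float
    consecutive: int = 3  # samples in a row that must breach before firing


def is_firing(rule: ThresholdRule, samples: Sequence[float]) -> bool:
    """Return True if the most recent `rule.consecutive` samples all exceed the threshold."""
    if len(samples) < rule.consecutive:
        return False
    recent = samples[-rule.consecutive:]
    return all(value > rule.threshold for value in recent)


cpu_rule = ThresholdRule(metric="cpu_percent", threshold=80.0)
print(is_firing(cpu_rule, [42.0, 85.0, 91.0, 88.0]))  # last three samples are all above 80
print(is_firing(cpu_rule, [85.0, 91.0, 60.0, 88.0]))  # one of the last three dipped below 80
```

Requiring several consecutive breaches is one common way to avoid alerting on a single spike. It also shows the limit of monitoring: the rule can say that CPU is high, but not why.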

Synthetic Monitoring and RUM: Part of Observability?

Yes, since monitoring is part of observability!

Synthetic Monitoring:

Simulates user interactions (e.g., pinging an API or loading a page) at regular intervals.

YES, it’s part of observability—it helps you proactively test availability and performance even when no real users are active.
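A single synthetic probe might look roughly like the sketch below, which uses only the Python standard library. The names `CheckResult`, `http_status`, and `run_check` are invented for this example, and treating any status from 200 to 399 as “up” is an assumption; commercial synthetic monitoring products do far more (multi-step journeys, multiple locations, browser rendering).

```python
import time
import urllib.error
import urllib.request
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class CheckResult:
    url: str
    ok: bool
    status: Optional[int]
    elapsed_seconds: float


def http_status(url: str, timeout: float = 5.0) -> int:
    """Fetch `url` and return its HTTP status code."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # urllib reports 4xx/5xx responses as exceptions


def run_check(url: str, fetch: Callable[[str], int] = http_status) -> CheckResult:
    """Run one synthetic probe and record availability and latency."""
    started = time.monotonic()
    try:
        status: Optional[int] = fetch(url)
    except OSError:  # DNS failure, refused connection, timeout, ...
        status = None
    elapsed = time.monotonic() - started
    ok = status is not None and 200 <= status < 400
    return CheckResult(url, ok, status, elapsed)


# Demo with a stubbed fetcher so the example runs without network access.
result = run_check("https://example.com/health", fetch=lambda url: 200)
print(result.ok, result.status)
```

In practice a scheduler would call `run_check` at a fixed interval and feed the results into an alert rule. The `fetch` parameter is injectable so the probe logic can be exercised without a live endpoint.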

RUM (Real User Monitoring):

Measures the actual experience of real users (page load times, errors, Core Web Vitals).

YES, this is also part of observability—it tells you how the system feels to actual users in the wild.
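RUM data arrives as many individual samples, and it is usually summarized with a percentile rather than an average so that a few very slow page loads stay visible without dominating the number. Core Web Vitals, for instance, are conventionally assessed at the 75th percentile. The sketch below uses a simple nearest-rank percentile; the function name and the sample values are made up for illustration.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))  # 1-based rank into the sorted list
    return ordered[rank - 1]


# Hypothetical page-load times in seconds, as reported by real browsers.
page_loads = [1.2, 0.9, 3.8, 1.1, 1.4, 2.0, 1.0, 6.5]
print(percentile(page_loads, 75))  # 2.0
```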

Together, synthetic monitoring + RUM + OpenTelemetry (OTel) = a complete observability picture.

The Pillars of Observability

Traditionally, observability is built on three main pillars:

- Metrics: Numeric measurements over time (e.g., CPU usage, request rates)

- Logs: Text records of events (e.g., error logs, audit trails)

- Traces: Distributed traces that show the full lifecycle of a request across systems

The relatively new standard for collecting these signals is OpenTelemetry:

OpenTelemetry (OTel) is an open-source observability framework under the Cloud Native Computing Foundation (CNCF). It enables applications to collect, process, and export telemetry data (logs, metrics, and traces) for better monitoring and debugging.
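To make the three signal types concrete, here is a standard-library-only sketch of one request producing a metric, a log line, and a small trace. It deliberately does not use the OpenTelemetry SDK; the `span` helper, the in-memory lists, and `handle_checkout` are all hypothetical stand-ins for what a real instrumentation library would provide.

```python
import json
import time
import uuid
from collections import Counter
from contextlib import contextmanager

request_count = Counter()  # metric: a numeric measurement aggregated over time
log_lines = []             # logs: structured text records of events
finished_spans = []        # traces: timed steps that share one trace ID


@contextmanager
def span(name, trace_id):
    """Time a block of work and record it as one span of a trace."""
    start = time.monotonic()
    try:
        yield
    finally:
        finished_spans.append(
            {"trace_id": trace_id, "name": name, "duration_s": time.monotonic() - start}
        )


def handle_checkout(cart_id):
    trace_id = uuid.uuid4().hex
    request_count["checkout"] += 1
    with span("checkout", trace_id):
        with span("charge_card", trace_id):
            pass  # real work would happen here
        log_lines.append(json.dumps(
            {"level": "info", "msg": "checkout done", "cart_id": cart_id, "trace_id": trace_id}
        ))
    return trace_id


trace = handle_checkout("cart-42")
print(request_count["checkout"], [s["name"] for s in finished_spans])
```

The point of the shared `trace_id` is correlation: starting from a slow span, you can find the log lines written during the same request.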

Bonus (emerging):

Profiles: Continuous profiling data to understand CPU/memory usage in detail.

Who Should Use Observability?

- DevOps / SRE teams: For infrastructure health and service reliability

- Developers: To debug code-level issues and performance bottlenecks

- Product teams: To correlate performance with business outcomes (e.g., cart abandonment)

- Security teams: To detect anomalies and potential breaches

When Do You Need Observability?

- You have complex systems (microservices, Kubernetes, cloud)

- You’re experiencing hard-to-diagnose incidents

- You want to shift left: catch issues earlier in the pipeline

- You’re scaling and need consistent reliability

Who Should Focus on Monitoring (and Why)?

While observability gives deep insight into why something is broken, there are many cases where monitoring alone is exactly what you need—no more, no less.

Case in point: you are responsible for a service or website, and you don’t care how the inside works, only about the quality of the service your users get. If and when there is an issue, you just alert the DevOps team, and bash them 🙂

Monitoring is best suited for:

- Teams managing simple or stable systems:

If your application is monolithic, predictable, or not changing often, a solid monitoring setup can keep you well-covered.

- Small teams with limited resources:

Monitoring is often simpler and faster to set up. Teams without the need (or time) for deep diagnostics can rely on alerts and dashboards for essential visibility.

- Compliance and SLA-focused teams:

Sometimes your main concern is ensuring that key metrics (like uptime, latency) stay within defined thresholds. Monitoring is perfect for “are we OK?” checks.

Common use cases:

- Websites that need uptime checks and basic performance metrics

- IT infrastructure (network gear, databases, servers) with known health parameters

- SaaS tools where only status monitoring is required

Why Stick with Monitoring in These Cases?

- Simplicity: Easier to set up and maintain.

- Cost-effective: Less data collection and storage overhead.

- Good enough: If the system rarely changes and incidents are predictable, monitoring might fully meet your needs.

Example:

A company running a basic e-commerce site might monitor:

- HTTP status codes

- Response times

- CPU/disk/memory

If these metrics look good, there’s no pressing need to trace internal microservices or explore deep logs.
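As a rough sketch, the three checks in this example could be combined into a single “are we OK?” report. Everything here is assumed for illustration: the `Request` record, the `health_report` function, and the default thresholds (1% server errors, 1 second, 80% CPU) are not recommendations from any tool.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Request:
    status: int     # HTTP status code
    seconds: float  # response time


def health_report(requests: Sequence[Request], cpu_percent: float,
                  max_error_rate: float = 0.01, max_seconds: float = 1.0,
                  max_cpu: float = 80.0) -> List[str]:
    """Return human-readable problems; an empty list means every check passed."""
    problems = []
    if requests:
        errors = sum(1 for r in requests if r.status >= 500)
        error_rate = errors / len(requests)
        if error_rate > max_error_rate:
            problems.append(f"5xx error rate {error_rate:.1%} exceeds {max_error_rate:.1%}")
        slowest = max(r.seconds for r in requests)
        if slowest > max_seconds:
            problems.append(f"slowest response {slowest:.2f}s exceeds {max_seconds:.2f}s")
    if cpu_percent > max_cpu:
        problems.append(f"CPU at {cpu_percent:.0f}% exceeds {max_cpu:.0f}%")
    return problems


sample = [Request(200, 0.12), Request(200, 0.30), Request(500, 0.25), Request(200, 0.18)]
print(health_report(sample, cpu_percent=45.0))
```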

The Bottom Line:

Monitoring is like a dashboard light:

It tells you something’s wrong quickly and clearly.

Observability is like a mechanic’s toolkit:

It helps you diagnose and fix the underlying issue.

For many teams and systems, especially small, stable, or legacy environments, monitoring might be all you need—providing peace of mind and operational reliability without the extra complexity.

Typical Tools and Services

| Tool/Service (example) | Purpose | Origin |
| --- | --- | --- |
| Datadog, New Relic, Splunk, Dash0 | Full-stack observability SaaS | Commercial vendors |
| Prometheus | Metrics collection & alerting (tool and data source) | CNCF (Cloud Native Computing Foundation) |
| Grafana | Visualization & dashboards (SaaS and open source) | Grafana Labs (commercial vendor) |
| OpenTelemetry (OTel) | Standardized collection of metrics, logs, traces | CNCF |
| Jaeger | Distributed tracing | CNCF |
| Synthetics (Apica, Datadog, AWS CloudWatch) | Synthetic monitoring | Various SaaS |
| RUM (mPulse, New Relic, Datadog) | Real user monitoring | Various SaaS |
| ELK Stack (Elasticsearch, Logstash, Kibana) | Logging & search | Elastic.co |

Not Just for Cloud-Native Systems

It’s easy to think of observability and monitoring as something built only for cloud-native environments—with Kubernetes, microservices, and serverless architectures in mind. But here’s the truth:

Observability is just as critical for traditional infrastructure and legacy systems.

Why It Matters for Traditional Infrastructure

Even if your systems are:

- Monolithic applications

- On-premises servers and VMs

- Legacy databases

- Network appliances

…you still need to answer the same core questions:

- Is my system healthy?

- How is performance trending?

- Why is something broken?

In fact, legacy environments can be even trickier to monitor because:

- Less built-in visibility (older software wasn’t designed with metrics in mind)

- Limited APIs and integrations

- Longer-lived outages and failures when things go wrong

Bringing Observability to Traditional Systems

The good news is that modern observability tools often support both cloud-native and traditional setups. For example:

- Prometheus Node Exporter monitors bare-metal and VM resources.

- Synthetic monitoring and RUM don’t care how your services are implemented.

- SNMP exporters let you collect metrics from network devices and appliances.

- Log shippers (like Filebeat, Fluentd) work with any text-based logs, even from legacy systems.

- Custom scripts and plugins can expose metrics from monolithic apps.

- OpenTelemetry also supports a range of environments, and its agent-based collectors can gather data from traditional systems and forward it to your observability backend.
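As an illustration of the “custom scripts” approach, the sketch below exposes a couple of values in the Prometheus text exposition format using only the Python standard library. The metric names, the `/metrics` handler, and the port are assumptions for this example; in a real setup the official Prometheus client library would normally be preferred over hand-written formatting.

```python
import shutil
from http.server import BaseHTTPRequestHandler, HTTPServer


def render_metrics(metrics):
    """Render {name: (help_text, value)} as Prometheus text-format gauges."""
    lines = []
    for name, (help_text, value) in sorted(metrics.items()):
        lines.append(f"# HELP {name} {help_text}")
        lines.append(f"# TYPE {name} gauge")
        lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


def collect():
    """Gather a couple of host-level values; the metric names are made up for this sketch."""
    disk = shutil.disk_usage("/")
    return {
        "legacy_app_disk_free_bytes": ("Free bytes on the root filesystem.", disk.free),
        "legacy_app_disk_total_bytes": ("Total bytes on the root filesystem.", disk.total),
    }


class MetricsHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_metrics(collect()).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8000):
    """Blocking HTTP server; run it from a small sidecar script next to the legacy app."""
    HTTPServer(("", port), MetricsHandler).serve_forever()


print(render_metrics({"legacy_app_queue_depth": ("Jobs waiting in the queue.", 7)}), end="")
```

With `serve()` running, a Prometheus server could scrape the `/metrics` path on that port like any other target, which is how a monolith with no built-in instrumentation can still show up on the same dashboards as newer services.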

The Takeaway

Whether you’re running a modern Kubernetes stack or a 25-year-old Java monolith on a bare-metal server, the need for visibility doesn’t change.

In fact, bridging the gap between legacy and modern infrastructure is often the key to a holistic observability strategy—especially for organizations in the middle of digital transformation.

Observability isn’t just for the cloud—it’s for everything you care about keeping healthy and reliable.

In Conclusion

Monitoring and observability are your eyes and ears in today’s complex digital world. While monitoring tells you what’s broken, observability helps you understand why.

Whether you’re proactively testing uptime with synthetic checks, measuring real-world performance with RUM, or digging into distributed traces and metrics with Prometheus + OpenTelemetry, you’re building a system that’s robust, reliable, and user-centric.

Start with monitoring if you’re new—but aim for full observability as your systems grow.